I am… research about learned representations, decision-making, and reasoning at OpenAI.

My PhD thesis was on Intelligent Agents via Representation Learning (pdf, video), advised by Antonio Torralba and Phillip Isola at MIT.

Research. I am interested in the two intertwined paths towards generalist agents: (1) agents (pre)trained for multi-task settings, and (2) agents that can adaptively solve new tasks. I believe that representation is the key that connects learning, adaptation, and capabilities.

Misc. I was a core PyTorch dev (ver. 0.2-1.2) and coded some open-source machine learning projects.

Email: tongzhou _AT_ mit _DOT_ edu

Selected Publications (* indicates equal contribution; full list)

| Representations of generalist agents | What is machine learning really learning? |

The Platonic Representation Hypothesis [ICML 2024 (Position Paper)] [Project Page] [arXiv] [Code] Minyoung Huh*, Brian Cheung*, Tongzhou Wang*, Phillip Isola*

Hypothesis

All strong AI models converge to one representation that fits reality, regardless of data, objective, or modality. (Rephrased; see the paper for evidence and more.)

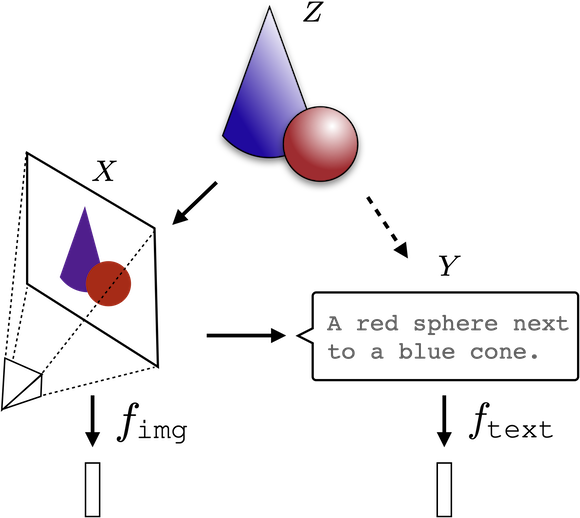

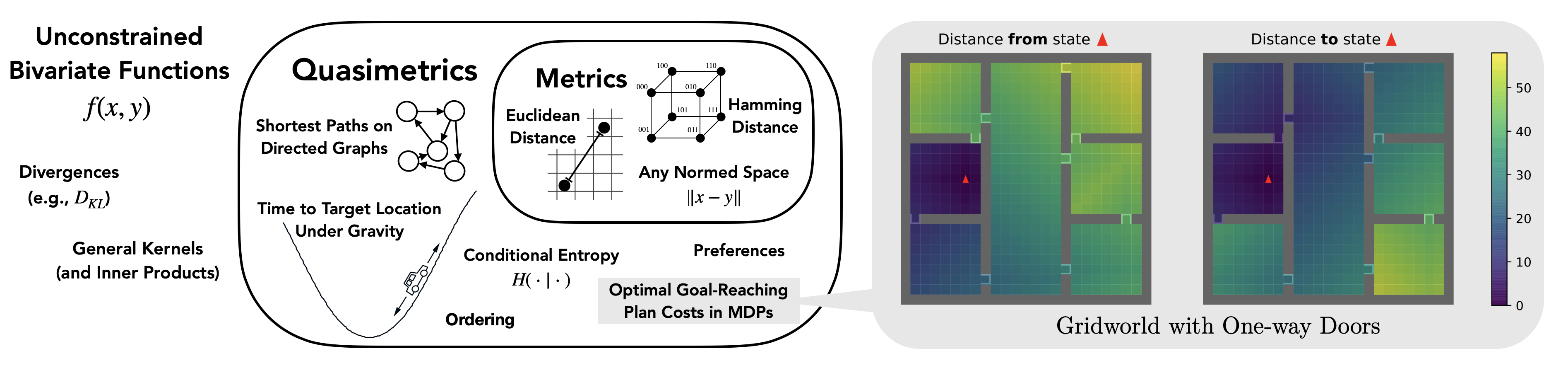

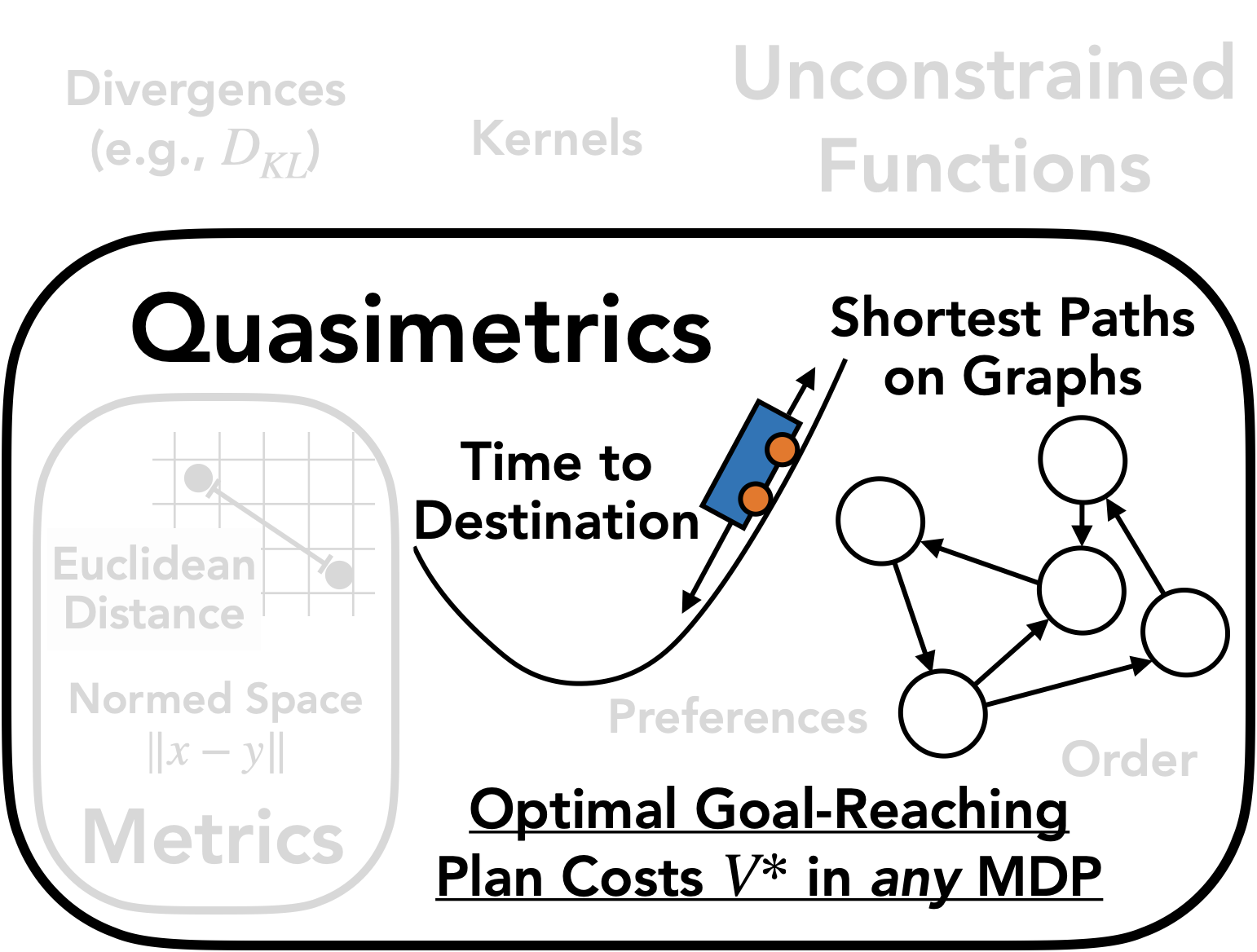

Optimal Goal-Reaching Reinforcement Learning via Quasimetric Learning [ICML 2023] [Project Page] [arXiv] [Code] Tongzhou Wang, Antonio Torralba, Phillip Isola, Amy Zhang

Denoised MDPs: Learning World Models Better Than The World Itself [ICML 2022] [Project Page] [arXiv] [code] Tongzhou Wang, Simon S. Du, Antonio Torralba, Phillip Isola, Amy Zhang, Yuandong Tian

On the Learning and Learnability of Quasimetrics [ICLR 2022] [Project Page] [arXiv] [OpenReview] [code] Tongzhou Wang, Phillip Isola

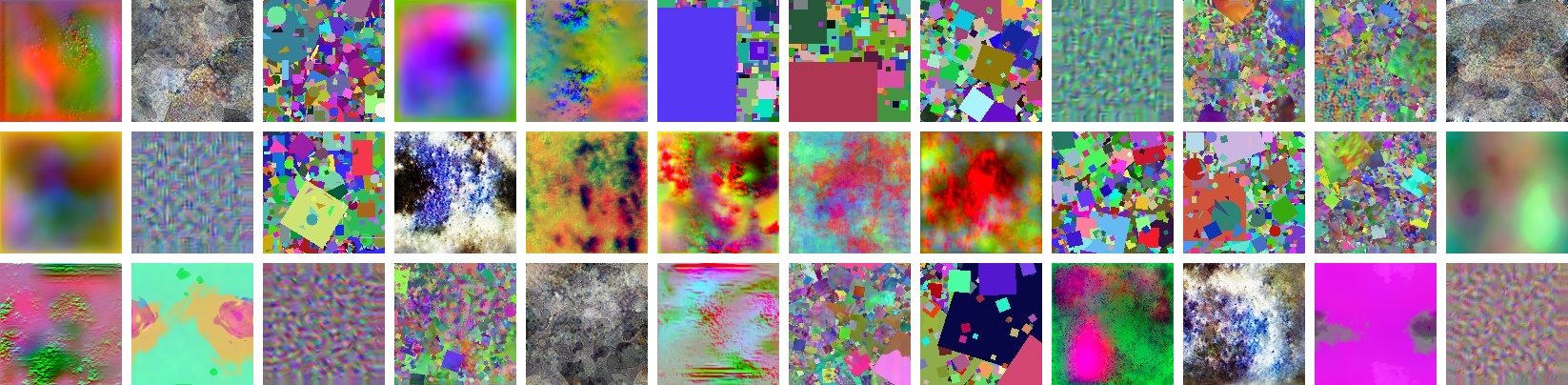

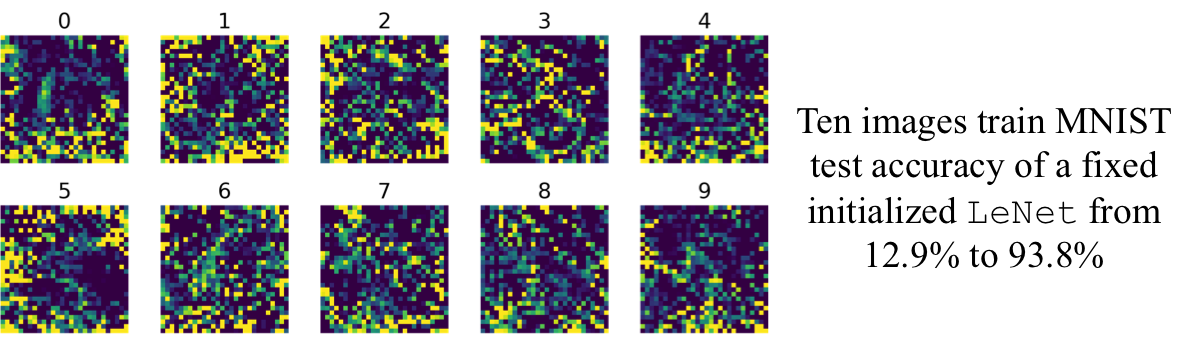

Learning to See by Looking at Noise [NeurIPS 2021] [Project Page] [arXiv] [code & datasets] Manel Baradad*, Jonas Wulff*, Tongzhou Wang, Phillip Isola, Antonio Torralba

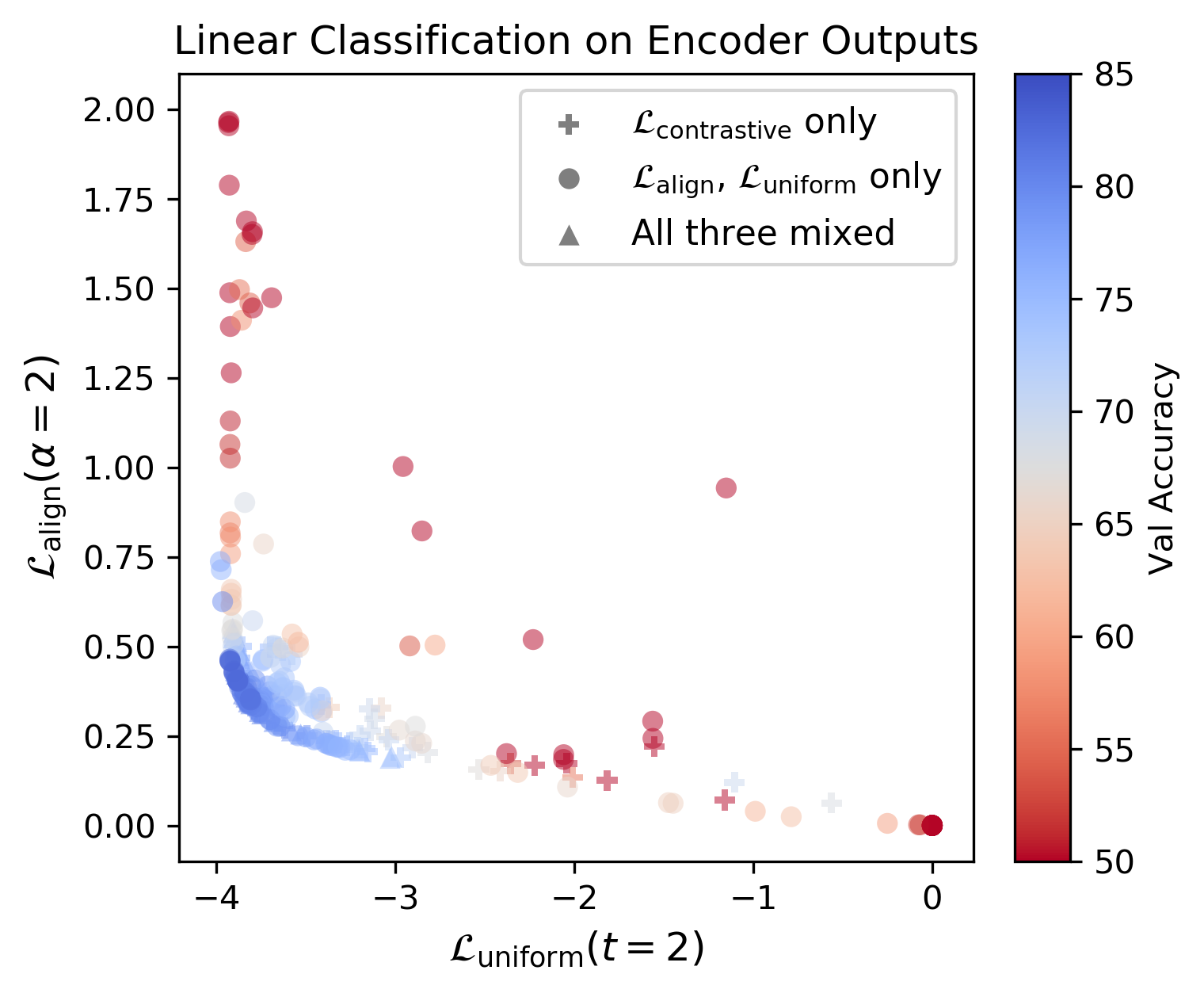

Understanding Contrastive Representation Learning through Alignment and Uniformity on the Hypersphere [ICML 2020] [Project Page] [arXiv] [code] Tongzhou Wang, Phillip Isola

```python
# bsz : batch size (number of positive pairs)
# d   : latent dim
# x   : Tensor, shape=[bsz, d]
#       latents for one side of positive pairs
# y   : Tensor, shape=[bsz, d]
#       latents for the other side of positive pairs

def align_loss(x, y, alpha=2):
    return (x - y).norm(p=2, dim=1).pow(alpha).mean()
```

PyTorch implementation of the alignment and uniformity losses
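The caption mentions a uniformity loss, but only the alignment loss appears in the snippet. A minimal sketch of the uniformity side follows, assuming the usual formulation as the log of the mean Gaussian potential over all pairs of latents in a batch. The default `t=2` and the requirement that `x` be L2-normalized are assumptions here; see the paper and code for the authoritative version.

```python
import torch

# x : Tensor, shape=[bsz, d]
#     latents for a batch, assumed L2-normalized (points on the unit hypersphere)
# t : temperature of the Gaussian potential (assumed default)

def uniform_loss(x, t=2):
    # Squared Euclidean distances between all distinct pairs in the batch.
    sq_dists = torch.pdist(x, p=2).pow(2)
    # Log of the average Gaussian potential exp(-t * ||x_i - x_j||^2).
    return sq_dists.mul(-t).exp().mean().log()


# Example usage on random unit-norm latents.
x = torch.nn.functional.normalize(torch.randn(8, 4), dim=1)
loss = uniform_loss(x)
```

Lower values mean the latents are spread more evenly; the value is 0 when all points coincide and is never positive.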


Dataset Distillation [Project Page] [arXiv] [code] [DD Papers] Tongzhou Wang, Jun-Yan Zhu, Antonio Torralba, Alexei A. Efros

Quasimetric geometry
